Hi,

I recently upgraded some ODA lite servers from appliance software 18.8 to 19.6 and afterwards to 19.9 and, as usual, there are some findings I want to share with you.

Typically, I see ODA upgrades by other Oracle consultants run perfectly - or with only minor issues. Luckily, I learn more when things fail and I have to dig into the root causes. We learned a lot again this time - and maybe it saves you from having to learn these things the hard way.

Let's start with findings for the upgrade from appliance software 18.8 to 19.6.

1.) ODABR

ODABR is the backup and recovery tool for bare metal ODAs and is available on My Oracle Support. The documentation already mentions that it must be installed before the upgrade. Unfortunately, it is not part of the upgrade software itself, so you have to download and install it separately. It is really useful and you should run odabr backup -snap BEFORE every upgrade! I had to restore the system with odabr during the server upgrade from 19.6 to 19.9.

For the OS upgrade, the odabr snapshot is created automatically, so there is no need to take one manually beforehand.

Check for space issues if you have resized the /u01 partition using LVM. Even if the automatic snapshot fails, you can run odabr manually with smaller snapshot sizes (this is also described in the patch documentation).

2.) ld-linux.so.2 segfault at 0 ip XXXXX errors at the Pre-Check for OS Upgrade (odacli create-prepatchreport)

As you may know, the ODA runs on Oracle Enterprise Linux 6 with appliance software 18.8 and on Oracle Enterprise Linux 7 with appliance software 19.6. The pre-check is based on an upgrade tool from Red Hat and collects a lot of data.

While the job was running, I saw several segmentation fault errors - all in ld-linux.so.2 - on the console and in the Linux log. The job itself completes successfully. If you check the other Linux logs (like /var/log/messages, the dcs-agent.log, etc.), this segfault does not show up for any "normal" ODA operation, only for the pre-check itself.

With the help of Oracle we confirmed that it is safe to proceed with the OS upgrade at this point, as all Oracle Linux 6 libraries are replaced with their Oracle Linux 7 counterparts. By the way - I saw some heartbeat messages on the console after the upgrade to 19.6, but they were gone after the upgrade to 19.9.

3.) Network issues after OS Upgrade (sfpbond vlans down)

Our customer runs two different VLANs over the sfpbond using a public bridge (as they use KVM). The upgrade itself was fine, but the sfpbond1.200 and sfpbond1.3001 VLAN interfaces were down afterwards. They were missing "ONPARENT=yes" in their ifcfg scripts. After adding this parameter, the bond worked fine with the VLANs. OEL 6 didn't need it to run, but this was a misconfiguration of our own (as VLAN/KVM wasn't supported by odacli when we deployed with 12.1).
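To spot this before (or right after) the OS upgrade, the VLAN ifcfg files can be scanned for the missing parameter. The following is only a minimal sketch: the function name is made up for this post, and it assumes that VLAN interface files carry a dot in their name (like ifcfg-sfpbond1.200) and live in the usual network-scripts directory.

```shell
# List VLAN-style ifcfg files (name contains a dot, e.g. ifcfg-sfpbond1.200)
# that have no ONPARENT=yes line.
# Assumption: the default directory below is where your ifcfg files live.
# Note: the glob also matches leftovers such as ifcfg-eth0.bak.
find_vlans_missing_onparent() {
    local dir="${1:-/etc/sysconfig/network-scripts}"
    local f
    for f in "$dir"/ifcfg-*.*; do
        [ -e "$f" ] || continue   # glob matched nothing
        if ! grep -Eiq '^[[:space:]]*ONPARENT="?yes"?[[:space:]]*$' "$f"; then
            echo "$f"
        fi
    done
}
```

Calling find_vlans_missing_onparent without an argument checks the default directory; every file it prints is a candidate for adding ONPARENT=yes.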

4.) After the OS upgrade, take a new ODABR backup - I didn't need it, but you should have it.

Next, the upgrade from 19.6 to 19.9.

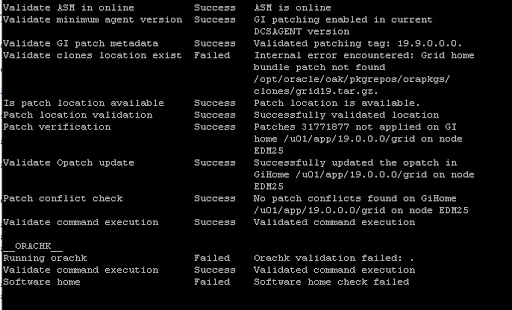

1.) It is essential that you DO an ODABR backup before you start the upgrade to ODA appliance software 19.9. The prepatch report WILL fail (on my ODAs it failed every time), so don't be alarmed by it. There are two different failures, which you can also see in the screenshot of my describe-prepatchreport output:

The first is that the "validate clones location exist" check fails because the grid home bundle patch /opt/oracle/oak/pkgrepos/orapkgs/clones/grid19.tar.gz is not found. This error can be safely ignored, as this package is only used for the upgrade to 19.6 and you have probably (surely) run an odacli cleanup-patchrepo in the meantime ;-).

The second is that the orachk validation fails and, as a consequence, the software home check fails as well. This is a known issue. You have to use the "-sko" parameter (which means skip orachk) for your odacli update-server run.

2.) Another issue came up during the server upgrade: the ILOM patch was not successful. I had to revert to the last ODABR snapshot, reboot the server, create a new snapshot backup with ODABR and patch the ILOM manually with the right ILOM patch. The reason is that Oracle has changed how the ILOM patch is deployed. You now need port 623 open between the public ODA interface and your ILOM IP addresses for IPMI over UDP (there is a support.oracle.com note for this).

I checked this with the customer - they say the port is open, and nc - well, see for yourself:

[root@YYY ~]# nc -z -v -u 172.17.X.XX 623

Connection to 172.17.X.XX 623 port [udp/asf-rmcp] succeeded!

But the ILOM upgrade still fails. The root cause is unknown, as the appliance should try two different ways: first IPMI over UDP, because it is faster, and, if that fails, the internal bus. However, it stops before the second way is tried.
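One remark on the nc test: UDP is connectionless, so nc reports "succeeded!" as long as no ICMP "port unreachable" comes back. It does not prove that the ILOM really answers IPMI requests. A more telling check is a real IPMI call over the LAN interface. This is only a sketch under assumptions: ipmitool is installed, IPMI over LAN is enabled on the ILOM, the password is exported in IPMI_PASSWORD, and the function name is my own invention.

```shell
# Ask the ILOM for its chassis status over IPMI v2.0 (RMCP+, UDP port 623).
# Assumptions: ipmitool is installed, IPMI over LAN is enabled on the ILOM,
# and the password is exported in IPMI_PASSWORD (read by the -E option).
check_ipmi_lan() {
    local ilom_host="$1"
    local ilom_user="$2"
    if ipmitool -I lanplus -H "$ilom_host" -U "$ilom_user" -E chassis status; then
        echo "IPMI over LAN to $ilom_host works"
    else
        echo "IPMI over LAN to $ilom_host failed" >&2
        return 1
    fi
}
```

Run it from the ODA itself, for example check_ipmi_lan 172.17.X.XX root with your ILOM address and user, so the request takes the same network path as the patching job.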

3.) KVM machines are not running

Yes, after you have upgraded to 19.6 or 19.9, your 18.X VMs will not start if you run

virsh start edm38 (where edm38 is my machine name)

=> error: Failed to start domain edm38

=> error: Cannot check QEMU binary /usr/libexec/qemu-kvm: No such file or directory

The reason for this problem is clear - qemu has changed with Oracle Enterprise Linux 7. The old qemu-kvm was replaced by qemu-system-x86_64. The machine types have changed as well (e.g. rhel6.6.0 does not exist anymore).

You need to edit the libvirt configuration of your machines. Use virsh edit for this, as editing the XML description files directly with vi does not work.

First select the machine type you want to use by running qemu-system-x86_64 -M ?

Personally I have picked just "pc" as it is an alias of the newest pc-i440fx-3.1 machine type.

Second, find all your machine names with virsh list --all (virsh list only shows running VMs).

Third run virsh edit MachineName and change the following lines:

FROM <type arch='x86_64' machine='rhel6.6.0'>hvm</type>

TO <type arch='x86_64' machine='<YourMachineType>'>hvm</type>

In my case I changed it to:

<type arch='x86_64' machine='pc'>hvm</type>

And also change the line

FROM <emulator>/usr/libexec/qemu-kvm</emulator>

TO <emulator>/usr/bin/qemu-system-x86_64</emulator>

Don't forget to change the folder to /usr/bin as qemu-system-x86_64 is not in /usr/libexec anymore.

Save the file and exit the editor - now you should be able to start the vm with virsh start MachineName.
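If you have more than a handful of VMs, the two changes can also be scripted instead of typed into virsh edit. The following is a rough sketch, not something I ran as part of the upgrade: the helper name fix_domain_xml is made up, it assumes the old machine type starts with rhel6 and that the emulator line looks exactly like the one above, and you should review the resulting XML before defining it.

```shell
# Rewrite a libvirt domain XML read from stdin:
#  - replace any rhel6.x machine type with the given one (default: pc)
#  - point the emulator at /usr/bin/qemu-system-x86_64
# fix_domain_xml is a hypothetical helper name, not part of libvirt.
fix_domain_xml() {
    local machine="${1:-pc}"
    sed -e "s|machine='rhel6[^']*'|machine='${machine}'|" \
        -e "s|<emulator>/usr/libexec/qemu-kvm</emulator>|<emulator>/usr/bin/qemu-system-x86_64</emulator>|"
}

# Possible usage for one VM (check /tmp/edm38.xml before defining it):
#   virsh dumpxml edm38 | fix_domain_xml pc > /tmp/edm38.xml
#   virsh define /tmp/edm38.xml
#   virsh start edm38
```

The function only filters text, so you can run it on a dumped XML as often as you like without touching the VM until virsh define is called.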

4.) Creating a new database fails in the step of configuring the network (the database itself is created and configured, but the odacli create-database job failed with "Failed to associate network").

As I only have VLANs on these machines, I think it has something to do with that, and with the fact that I upgraded the servers from a 12.1 deployment made more than 3 years ago. Maybe something is not configured the way newer deployments do it with the VLAN commands provided these days.

I didn't investigate the root cause of this error much further. The database wasn't registered with the listener (and I was also not able to add the database configuration or a network with odacli), so I fixed it manually.

What did the trick on my database was adding the right "listener_networks" entry:

alter system set listener_networks='((NAME=net1)(LOCAL_LISTENER=(ADDRESS=(PROTOCOL=TCP)(HOST=172.16.XXX.XXX)(PORT=1521))))' scope=both;
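If several databases need the same fix, the statement can be generated instead of edited by hand each time. A small sketch, assuming a single network entry as in my case; the helper name is invented for this post and the port defaults to 1521.

```shell
# Print the ALTER SYSTEM statement for a single-network listener_networks entry.
# Arguments: network name, listener host/IP, port (default 1521).
# build_listener_networks_sql is a hypothetical helper name.
build_listener_networks_sql() {
    local net_name="$1" host="$2" port="${3:-1521}"
    printf "alter system set listener_networks='((NAME=%s)(LOCAL_LISTENER=(ADDRESS=(PROTOCOL=TCP)(HOST=%s)(PORT=%s))))' scope=both;\n" \
        "$net_name" "$host" "$port"
}
```

The output can be pasted into a SQL*Plus session connected as sysdba to the affected database; afterwards, check with lsnrctl status that the database services show up at the listener.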

These were my findings. I hope you don't run into them, but if you do, maybe my little post will help you out.